When you change the output image's X resolution, the camera's render frame scales along its Y dimension instead. As far as I know, this counterintuitive behaviour has to do with the camera's sensor size settings (in the "Camera" panel of the camera's "Object Data" tab), where the sensor fit is set to "Auto" by default (unless you choose a sensor preset, in which case the setting depends on the chosen sensor) and fits the image dimensions into a frame of fixed size. If you reduce the X dimension far enough, the frame switches from growing in the view's Y direction to shrinking along the X axis. With "Horizontal" as sensor fit, the render frame's extent along the view's X axis is kept and the Y extent is scaled to preserve the image's X-to-Y resolution ratio. With "Vertical" it is the other way round: the extent along the view's Y direction is kept.

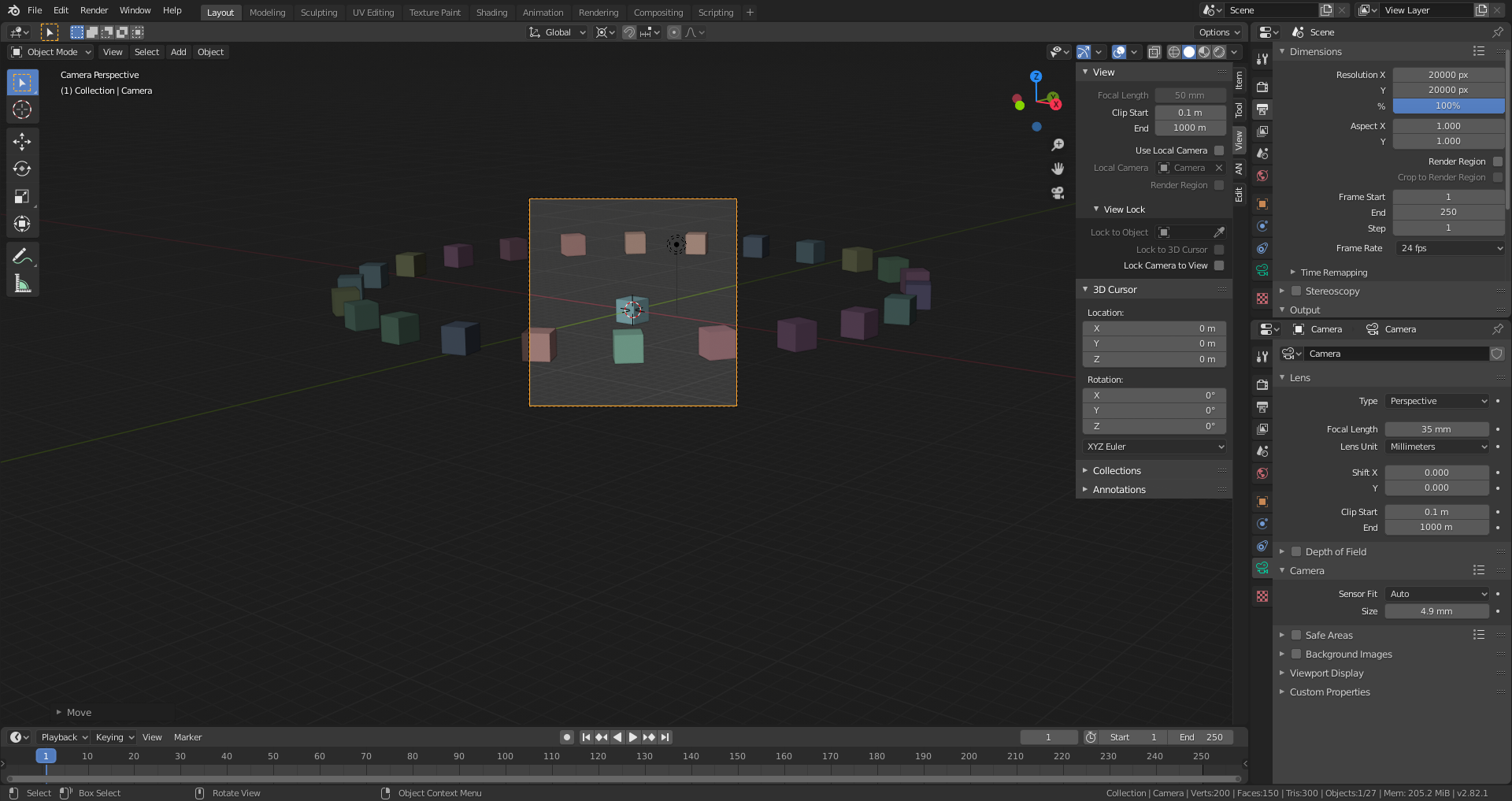

With "Auto" as sensor fit, the view angle never exceeds the limits of a square render frame for equal values in X and Y, even if I choose extremely high values:

As soon as I crank one of the two values up or down, the visible part of the scene shrinks in X or Y:

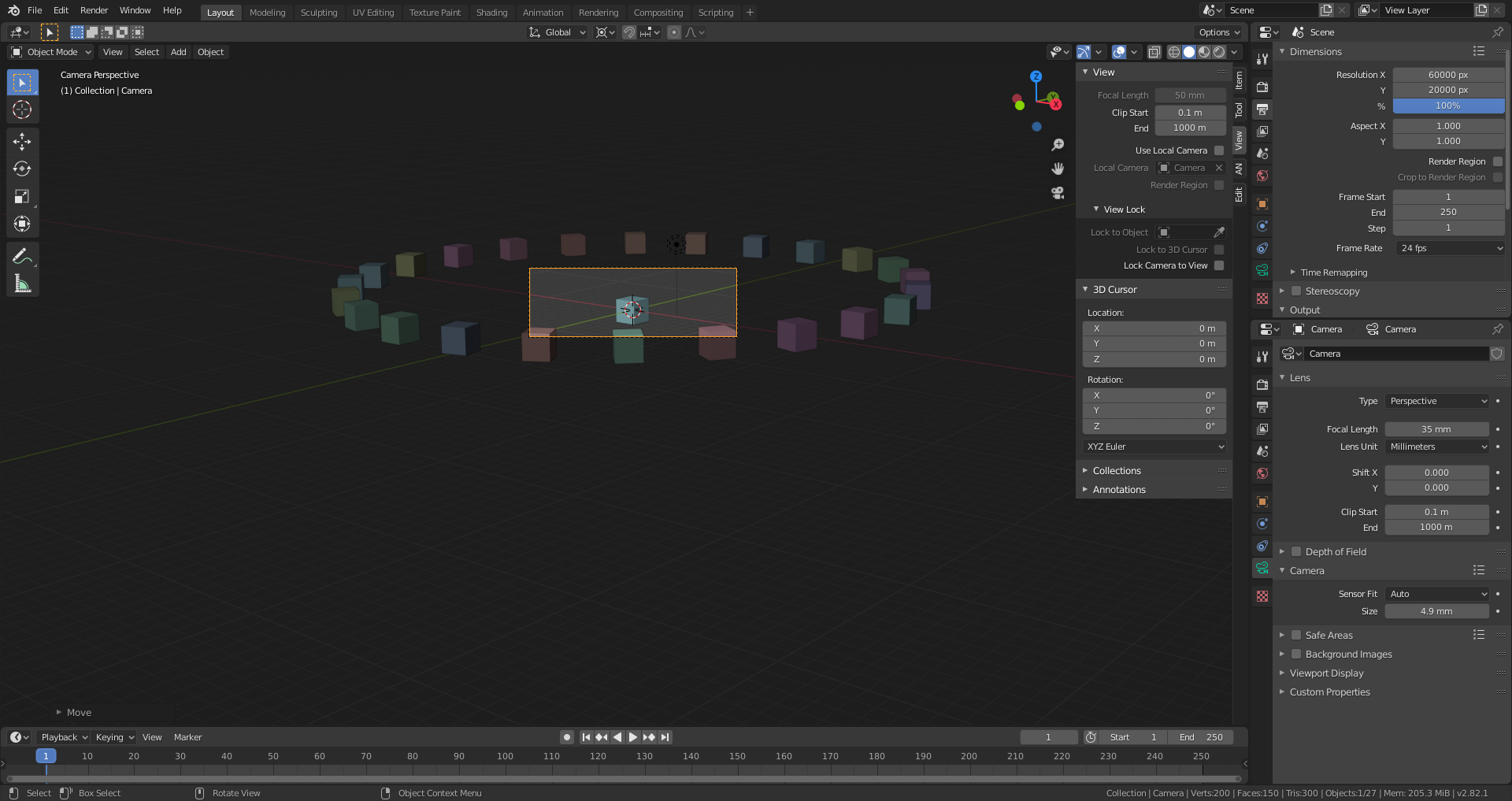

With "Sensor Fit" set to "Vertical", I get a square view of the scene for equal X and Y, but as the X-to-Y ratio grows, the visible area can become so wide that I can't zoom out any further:

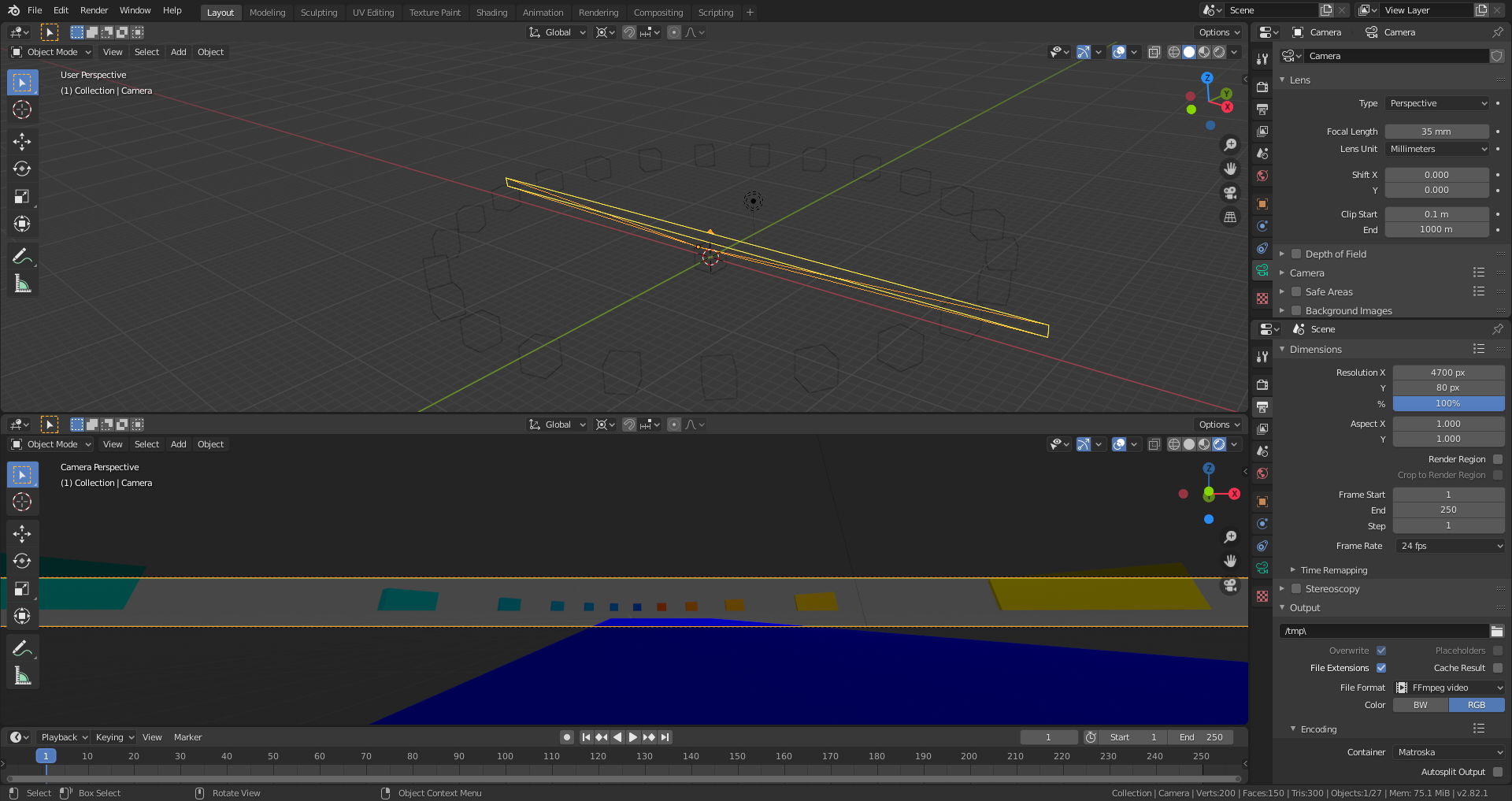

With "Sensor Fit" set to "Horizontal", the same happens in the view's vertical direction.

The scene also zooms out when I switch "Sensor Fit" from "Auto" or "Horizontal" to "Vertical".

What is the point of this behaviour? Does it have consequences for how far I can scale up my final rendering when printing it (for example as a wallpaper ;)? And why can't the visible part of the scene be scaled in X and Y simultaneously?

You don't need to play with the sensor size unless you are trying to match a specific real-world camera. (It will probably break your brain if you do haha)

The default sensor size is 36mm with the fit set to Auto. It is meant to mimic the "Full Frame" format of shooting onto 35mm film or a digital sensor.

The sensor size has to do with the projection of the subject onto the image plane inside the camera (that's the point where the image is formed inside the camera).

A smaller sensor size is basically cropping out a portion of what is projected, which makes it appear more zoomed (when technically it isn't).

I hope that made sense.

As for the Resolution settings, as far as I know, Blender will always scale the frame of the camera (the orange outline) based on the ratio of X to Y. That is why 400x300 looks the same as 800x600 in the viewport (but the render output will be more pixelated).

You can test this out by starting with the same value for both, say 1000x1000, and then grabbing one of them and sliding it up and down. You will see it change from scaling the horizontal size to scaling the vertical size.
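Here is a rough sketch of that ratio behaviour in plain Python. It is only a simplified model of what the orange outline does with the default "Auto" fit, not Blender's own code, and the function name `camera_frame_shape` is made up for this example. It also ignores the pixel aspect ratio settings.

```python
def camera_frame_shape(res_x, res_y):
    """Return (width, height) of the camera frame as fractions of its longer side.

    Simplified model of the "Auto" fit: the longer side always fills the
    frame, and the shorter side shrinks according to the X:Y ratio.
    """
    longer = max(res_x, res_y)
    return res_x / longer, res_y / longer


# Same ratio gives the same outline, whatever the pixel count.
for res in [(400, 300), (800, 600), (1000, 1000), (1000, 500), (500, 1000)]:
    print(res, camera_frame_shape(*res))
```

In this model, sliding X above Y leaves the width at 1.0 and shrinks the height, while sliding X below Y shrinks the width instead, which matches the switch you see in the viewport.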

If you want to print an image at, say, 300dpi, the best solution I have found is to take the print size (real-world size) in inches and multiply each dimension by 300.

I usually have to google this every time I do it so it's worth double checking my calculations here but....

If you want something to be 16 inches wide by 9 inches high:

Start with the width: 16 inches multiplied by 300 gives the X resolution.

Then take the height: 9 inches multiplied by 300 gives the Y resolution.

That will be 4800 x 2700.
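The same arithmetic as a small Python helper, in case you want to reuse it for other print sizes. The function name `print_resolution` is just something I made up for this example, and it assumes the print size is given in inches.

```python
def print_resolution(width_in, height_in, dpi=300):
    """Convert a print size in inches to a pixel resolution at the given dpi."""
    return round(width_in * dpi), round(height_in * dpi)


print(print_resolution(16, 9))  # (4800, 2700)
```

The two numbers go straight into the X and Y resolution fields, with the percentage scale left at 100%.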

I think I'm correct in that, but as I said, I always do a quick google before sending any image to a client (that needs stuff for printing....which isn't too often for me)

Anyway, I hope that has been helpful.

> A smaller sensor size is basically cropping out a portion of what is projected, which makes it appear more zoomed (when technically it isn't)
>
> I hope that made sense.

Yes, it does make sense, since this is the "crop factor" (https://en.wikipedia.org/wiki/Crop_factor). If you print the image from a smaller sensor at the same format as an image from a bigger sensor, you have to scale it up, which makes it look as if it had been taken with a longer focal length (= zoomed in). But this is an illusion: changing the sensor size doesn't influence the depth of field (DOF), whereas the focal length does (the longer the focal length, the shallower the DOF and the narrower the viewing angle).

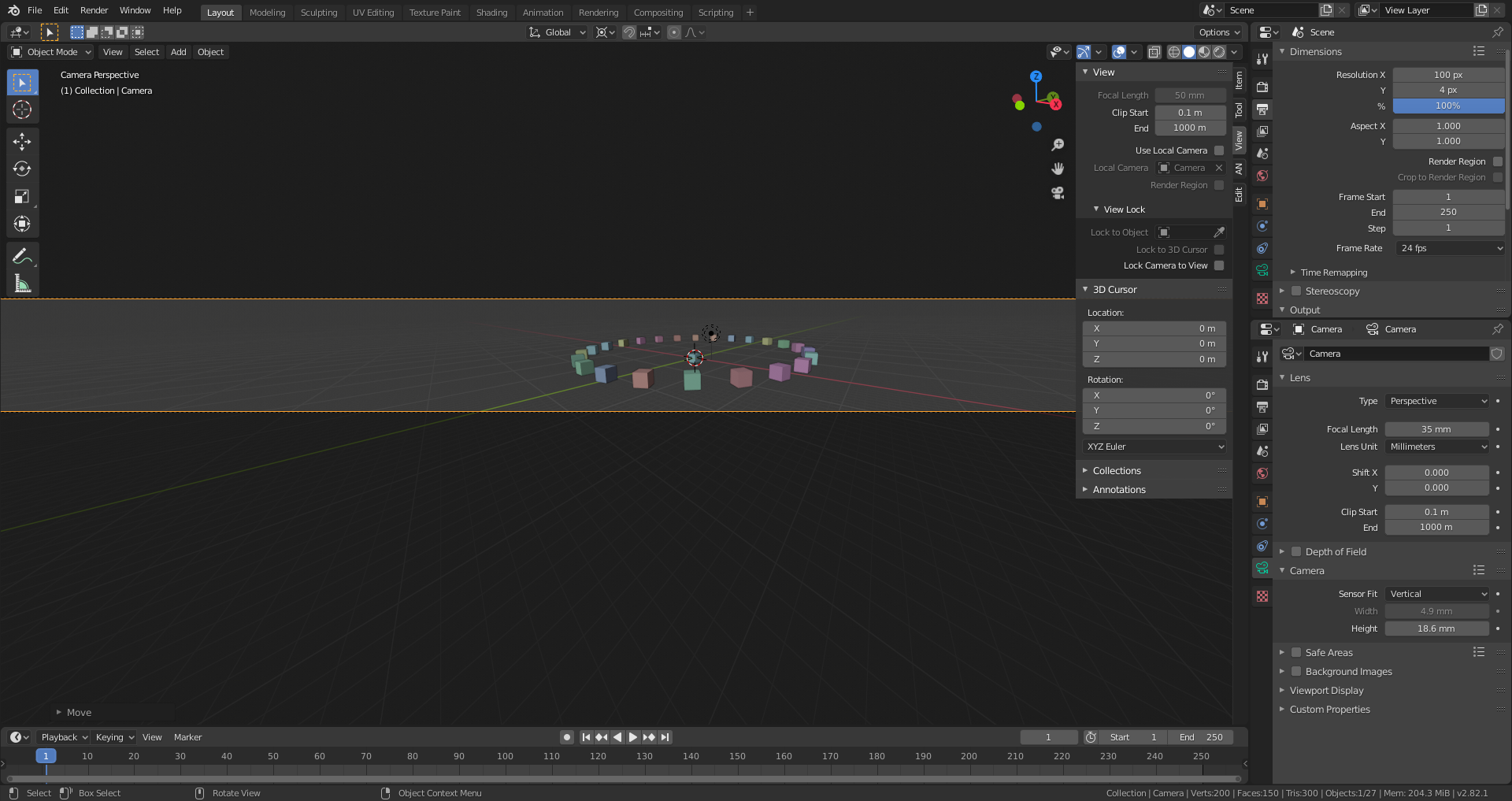
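To put numbers on the crop factor, here is a minimal sketch using the usual definition (diagonal of the 36 x 24 mm full-frame format divided by the diagonal of the sensor in question). The function names and the 18 x 12 mm example sensor are made up for illustration.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # reference "full frame" sensor, width x height


def crop_factor(sensor_w_mm, sensor_h_mm):
    """Ratio of the full-frame diagonal to the given sensor's diagonal."""
    return math.hypot(*FULL_FRAME_MM) / math.hypot(sensor_w_mm, sensor_h_mm)


def equivalent_focal_length(focal_mm, sensor_w_mm, sensor_h_mm):
    """Focal length that gives the same framing on a full-frame sensor."""
    return focal_mm * crop_factor(sensor_w_mm, sensor_h_mm)


# Hypothetical sensor with half the full-frame dimensions.
print(crop_factor(18.0, 12.0))
print(equivalent_focal_length(35.0, 18.0, 12.0))
```

A sensor with half the full-frame dimensions has a crop factor of 2, so a 35mm lens on it frames the scene like a 70mm lens on full frame.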

What I'm still wondering is why, in the last image above, the camera zoomed out when I switched from "Auto" to "Vertical" sensor fit, although the focal length is still 35mm and the sensor width hasn't changed.

With a render size of 4700 x 80 and "Vertical" sensor fit, the output image looks as if it was taken with a wide-angle lens at a viewing angle of 180 degrees:

I don't know whether this would also be the case if there were a real digital camera where you could select such an extreme format.

With a real camera, no, I don't think you can change the sensor size like this (it's a physical thing).

But I think the way these sensor size settings in Blender work (using Horizontal or Vertical) is actually changing how the view is mapped (transformed) onto the image plane. My guess is that, since all this is virtual, many things are possible in 3D, whereas in the real world you cannot transform the view matrix because... well, the real world sucks when it's compared to Blender haha.
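A rough model of that mapping, as far as I understand it: with "Horizontal" (or "Auto" on a landscape image) the sensor width is applied to the image width, while with "Vertical" the sensor height is applied to the image height and the width follows from the aspect ratio. The sketch below is plain Python, not Blender's code. The function name is made up, and the 24mm sensor height is an assumption about the default "Height" value.

```python
import math


def horizontal_fov_deg(res_x, res_y, focal_mm=35.0, sensor_fit="AUTO",
                       sensor_width=36.0, sensor_height=24.0):
    """Approximate horizontal angle of view for a given sensor fit."""
    aspect = res_x / res_y
    if sensor_fit == "HORIZONTAL":
        effective_width = sensor_width
    elif sensor_fit == "VERTICAL":
        # Sensor height covers the image height; the width scales with the ratio.
        effective_width = sensor_height * aspect
    elif sensor_fit == "AUTO":
        # Sensor width covers whichever image side is longer.
        effective_width = sensor_width if res_x >= res_y else sensor_width * aspect
    else:
        raise ValueError(f"unknown sensor fit: {sensor_fit}")
    return math.degrees(2 * math.atan(effective_width / (2 * focal_mm)))


for fit in ("AUTO", "VERTICAL"):
    print(fit, horizontal_fov_deg(1920, 1080, sensor_fit=fit))
print("VERTICAL 4700x80", horizontal_fov_deg(4700, 80, sensor_fit="VERTICAL"))
```

Under these assumptions the horizontal angle gets wider when switching a landscape image from "Auto" to "Vertical" at the same 35mm focal length, which would explain the zoom-out, and a 4700 x 80 image with "Vertical" fit ends up at roughly 174 degrees, close to the 180 degree look described above.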

But your experiments are quite interesting, Ingmar :)

It's funny how I have just been researching all this stuff for an upcoming course. However, it won't go anywhere near as in-depth as you have in your experiments here. (It will just explain what it is and then say "Leave it at default" haha)

just to chime in and maybe help, sensor size on a real camera is definitely a fixed physical thing...

it is equal to the idea of resolution, each unit is the one "pixel" element that receives light

so all the other things you are doing is artificial in Blender...

interesting experimentation!!

Just some last thoughts and experiments concerning the "Sensor Size":

1) "Auto" defines a square sensor. The value is identical to the "Width" value that you can define with "Horizontal Sensor Fit".

2) You can define custom sensor dimensions with the "Width" value set while "Horizontal Sensor Fit" is selected and the "Height" value set while "Vertical Sensor Fit" is selected.

3) Changing the "Render Size" resolution adapts the image to the sensor limits, changing the sensor resolution adapts the sensor to the render size limits.

4) I think the sensor settings only come into play when it's about combining real-life footage with computer animations. That's confirmed by the Blender manual when it says that the "Sensor Size" setting "is useful to match the camera in Blender to a physical camera and lens combination, e.g. for motion tracking." For purely computer-generated images, only the render resolution matters, and the visible part of the scene is determined by moving or rotating the camera and zooming in or out.

5) No matter what "Sensor Size" is chosen (with the "Sensor Size" display switched on in the active camera's "Object Data" tab), it's always possible to render images that go beyond the limits of the camera sensor.